Associate Professor with Indefinite Tenure

Carnegie Mellon University, Pittsburgh, PA USA

I am a tenured Associate Professor of Computer Science in the Software and Societal Systems Department in the School of Computer Science at Carnegie Mellon University in Pittsburgh, PA, USA. I am also affiliated with the Human-Computer Interaction Institute at Carnegie Mellon University. I am an Affiliate Professor at the Information School at the University of Washington in Seattle, WA, USA.

From January 2006 until June 2022, I was a Principal Researcher at Microsoft Research in Redmond, WA, USA.

I run the VariAbility Lab. Our research mission is to create inclusive workplaces where all people, especially those with disabilities and the neurodivergent, can be successful, without discrimination. Our current research projects focus on creating accommodative physical work environments, collaboration tools that facilitate communication between people with differing abilities, and educational programs that teach communication skills and improve social relationships between team members.

I am not recruiting any new PhD students right now. If you live in the USA, please urge your senators and congresspeople to continue funding scientific research in this country! Once funding stabilizes, I will recruit more PhD students. I am recruiting undergraduate students at CMU to work with me for the 2025-2026 school year. If you are interested in working in my lab, please get in touch! Include your CV and a brief description of the research you'd like to pursue.

In the past, I applied HCI techniques to study and improve the software development process. I studied biometrics and affect-based software engineering, social media for software engineering, collaborative software development, Agile methodologies, developer-centric knowledge management, flow and coordination, and K-16 and beyond programming education.

I received my Ph.D. from the University of California, Berkeley in December 2005. I studied with Susan L. Graham. My dissertation was about voice-based programming, how to build a development environment that supports it, and how well programmers can use it. It is intended for programmers with repetitive strain and other injuries that make it difficult for them to use the keyboard and mouse in their daily work. For the quick punch-line, read my dissertation abstract below.

At MIT, I received a Master of Engineering degree in Computer Science in 1997 and a Bachelor of Science degree in Electrical Engineering and Computer Science in 1996. I worked on StarLogo, a programmable modeling environment designed to help students learn about science. StarLogo runs via Java on PCs, Macs and Unix machines. A newer version of StarLogo, called StarLogo Nova, incorporates graphical block-based programming and a 3D turtle world to teach programming by enabling kids to create their own games and simulations.

I grew up in southeastern New York, in Rockland County. I've lived in NY, Boston, San Francisco, Seattle, and Pittsburgh. I live with my husband, Ben, 2 kids, and 2 dogs.

Carnegie Mellon University, Pittsburgh, PA USA

Carnegie Mellon University, Pittsburgh, PA USA

Microsoft Research, Redmond, WA USA

University of Washington, Information School, Seattle, WA USA

Microsoft Research, Redmond, WA USA

University of Washington, Information School

Microsoft Research, Redmond, WA USA

Xerox PARC Computer Science Laboratory, Palo Alto, CA USA

Supervisor: Michael Spreitzer

(Undergraduate Research Opportunities Program)

MIT Media Lab, Cambridge, MA USA

Supervisor: Mitchel Resnick

NYU Medical Center: Institute for Environmental Medicine, Sterling Forest, NY USA

Supervisor: Toby G. Rossman

NY Medical College: Biochemistry and Molecular Biology, Valhalla, NY USA

Supervisor: Yuk-Ching Tse-Dinh

Mnematics Videotex, Inc., Sparkill, NY USA

Master of Engineering in Electrical Engineering and Computer Science

Massachusetts Institute of Technology

Advisor: Mitchel Resnick

Bachelor of Science in Computer Science and Engineering

Massachusetts Institute of Technology

Advisor: Mitchel Resnick

Rank: 2 out of 443, Average 102.7

Ramapo Senior High School, Spring Valley, NY

I have worked with many very smart researchers, students, and visitors at Berkeley, Microsoft, and CMU.

You can see a list of my current students on my VariAbility Lab homepage.

I run the VariAbility Lab. Our research mission is to create inclusive workplaces where all people, especially those with disabilities and the neurodivergent, can be successful, without discrimination. Our current research projects focus on creating accommodative physical work environments, collaboration tools that facilitate communication between people with differing abilities, and educational programs that teach communication skills and improve social relationships between team members.

In practice, we combine human aspects of software engineering with human-computer interaction methods to understand and improve communication and working relationships between people with differing abilities and cognitive neurotypes (e.g. autistic and non-autistic people, or people who are blind or have low vision and sighted people).

Some of my current projects include

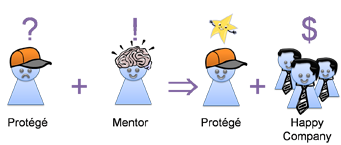

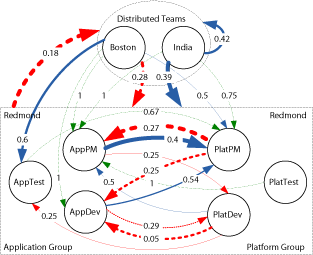

In the past, I have explored how technology and AI can play a role in extending the capabilities and enhancing the quality of life of people with disabilities. I looked at ethics practices in the development of AI and applied HCI techniques to study and improve the software development process. I have also studied biometrics and affect-based software engineering, social media for software engineering, collaborative software development, Agile methodologies, developer-centric knowledge management, flow and coordination, and K-16 and beyond programming education.

We use social networking to connect people and artifacts in software development-related repositories.

Deep Intellisense is a Visual Studio 2008 plugin that surfaces information from various silos (source control, bug tracking, mailing lists, etc.) to provide developers with instant context-sensitive feedback on any source code they are reading in the editor. Deep Intellisense works with Visual Studio Team Foundation System projects (such as those hosted on CodePlex), email archives (from Outlook), and SharePoint sites.

Programmers who suffer from repetitive stress injuries find it difficult to program by typing. Speech interfaces can reduce the amount of typing, but existing programming-by-voice techniques make it awkward for programmers to enter and edit program text. We used a human-centric approach to address these problems. We first studied how programmers verbalize code, and found that spoken programs contain lexical, syntactic and semantic ambiguities that do not appear in written programs. Using the results from this study, we designed Spoken Java, a semantically identical variant of Java that is easier to speak. Inspired by a study of how voice recognition users navigate through documents, we developed a novel program navigation technique that can quickly take a software developer to a desired program position.

Spoken Java is analyzed by extending a conventional Java programming language analysis engine written in our Harmonia program analysis framework. Our new XGLR parsing framework extends GLR parsing to process the input stream ambiguities that arise from spoken programs (and from embedded languages). XGLR parses Spoken Java utterances into their many possible interpretations. To semantically analyze these interpretations and discover which ones are legal, we implemented and extended the Inheritance Graph, a semantic analysis formalism which supports constant-time access to type and use-definition information for all names defined in a program. The legal interpretations are the ones most likely to be correct, and can be presented to the programmer for confirmation.
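The idea of producing many interpretations and keeping only the legal ones can be illustrated with a toy sketch. This is not XGLR, Harmonia, or the Inheritance Graph: the homophone table, the function names, and the three-token legality rule are all hypothetical stand-ins chosen to keep the example small.

```python
from itertools import product

# Hypothetical homophone table: each spoken word maps to the written
# tokens it might stand for. Words not listed stand only for themselves.
HOMOPHONES = {
    "for": ["for", "4", "four"],
    "to": ["to", "2", "two"],
    "i": ["i", "eye"],
    "equals": ["="],
}

def interpretations(utterance):
    """Enumerate every written token sequence a spoken utterance could mean."""
    choices = [HOMOPHONES.get(word, [word]) for word in utterance.split()]
    return [list(combo) for combo in product(*choices)]

def is_legal(tokens, declared_names):
    """Toy stand-in for semantic analysis (an assumption, not the real engine):
    accept only 'NAME = VALUE' where NAME is a declared name and VALUE is
    either a declared name or an integer literal."""
    if len(tokens) != 3 or tokens[1] != "=":
        return False
    name, _, value = tokens
    return name in declared_names and (value in declared_names or value.isdigit())

def legal_interpretations(utterance, declared_names):
    """Keep only the interpretations that pass the legality check."""
    return [t for t in interpretations(utterance) if is_legal(t, declared_names)]
```

For example, with only `i` declared, `legal_interpretations("i equals for", {"i"})` narrows six candidate token sequences down to the single sequence `["i", "=", "4"]`. If `eye` and `four` were also declared names, several interpretations would survive, which is the case where the programmer would need to be asked to confirm.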

We built an Eclipse IDE plugin called SPEED (for SPEech EDitor) to support the combination of Spoken Java, an associated command language, and a structure-based editing model called Shorthand. Our evaluation of this software with expert Java developers showed that most developers had little trouble learning to use the system, but found it slower than typing.

Although programming-by-voice is still in its infancy, it has already proved to be a viable alternative to typing for those who rely on voice recognition to use a computer. In addition, by providing an alternative means of programming a computer, we can learn more about how programmers communicate about code.

Harmonia is an open, extensible framework for constructing interactive, language-aware programming tools. Harmonia is a descendant of our earlier projects, Pan and Ensemble, and utilizes many analysis technologies developed for those projects. Harmonia includes an incremental GLR parser (which admits a more natural syntax specification than LR), a static semantic analysis engine, and other language-based facilities. Program source code is represented by annotated abstract syntax trees augmented with non-linguistic material such as whitespace and comments. The analysis engine can support any textual language that has formal syntactic and semantic specifications. The incremental nature of the analysis supports a history mechanism that is used both for history-based diagnostic information and for contextual rollback. New languages can be easily added to Harmonia by giving the system a syntactic and semantic description, which is compiled into a dynamically loadable extension for that language. Among the languages for which descriptions exist are Java, Cool (a teaching language), XML, Scheme, Cobol, C, and C++. Other languages are being added to Harmonia as resources permit.

The language technology implemented in the Harmonia framework is being used in two current research projects: support for high-level interactive transformations and programming by voice. Our research in interactive program transformations focuses on the problem of programmers' expression and interaction with a programming tool. We are combining the results from psychology of programming, user-interface design, software visualization, program analysis, and program transformation to create a novel programming environment that enables the programmer to express source code manipulations in a high-level conceptual manner. Programming by voice research augments traditional text editing by allowing the developer to dictate chunks of program source code as well as verbalize high-level editing operations. This research helps to lower frustrating barriers for software developers who suffer from repetitive strain injuries and other related disabilities that make typing difficult or impossible.

Harmonia can be used to augment text editors to robustly support the language-aware editing and navigation of documents, including those that are malformed, incomplete, or inconsistent (i.e. the document can remain in that state indefinitely). We have integrated Harmonia into XEmacs by creating a new Emacs "mode" that provides interactive, on-line services to the end user in the program composition, editing and navigation process.

StarLogo is a programmable modeling environment for exploring the workings of decentralized systems -- systems that are organized without an organizer, coordinated without a coordinator. With StarLogo, you can model (and gain insights into) many real-life phenomena, such as bird flocks, traffic jams, ant colonies, and market economies.

In decentralized systems, orderly patterns can arise without centralized control. Increasingly, researchers are choosing decentralized models for the organizations and technologies that they construct in the world, and for the theories that they construct about the world. But many people continue to resist these ideas, assuming centralized control where none exists -- for example, assuming (incorrectly) that bird flocks have leaders. StarLogo is designed to help students (as well as researchers) develop new ways of thinking about and understanding decentralized systems.

StarLogo is a specialized version of the Logo programming language. With traditional versions of Logo, you can create drawings and animations by giving commands to graphic "turtles" on the computer screen. StarLogo extends this idea by allowing you to control thousands of graphic turtles in parallel. In addition, StarLogo makes the turtles' world computationally active: you can write programs for thousands of "patches" that make up the turtles' environment. Turtles and patches can interact with one another -- for example, you can program the turtles to "sniff" around the world, and change their behaviors based on what they sense in the patches below. StarLogo is particularly well-suited for Artificial Life projects.
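The turtle-and-patch interaction described above can be sketched in a few lines of Python. This is not StarLogo code and does not use StarLogo's API: the world size, the "scent" variable, the evaporation rate, and every function name here are assumptions made for illustration, and the turtles are updated one after another rather than truly in parallel.

```python
import random

WIDTH, HEIGHT = 20, 20  # assumed world size, in patches

def make_world(seed=0, num_turtles=50):
    """Create a grid of patches (each holding a scent level) and some turtles."""
    rng = random.Random(seed)
    patches = [[0.0 for _ in range(WIDTH)] for _ in range(HEIGHT)]
    turtles = [(rng.randrange(WIDTH), rng.randrange(HEIGHT))
               for _ in range(num_turtles)]
    return rng, patches, turtles

def neighbors(x, y):
    """The four adjacent patches, wrapping around the edges of the world."""
    return [((x + dx) % WIDTH, (y + dy) % HEIGHT)
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))]

def step(rng, patches, turtles):
    """Run one tick: every turtle acts, then every patch acts."""
    moved = []
    for x, y in turtles:
        # Turtle rule: drop scent on the patch below, then "sniff" the
        # neighboring patches and move to the strongest (ties broken randomly).
        patches[y][x] += 1.0
        options = neighbors(x, y)
        best = max(patches[ny][nx] for nx, ny in options)
        moved.append(rng.choice(
            [(nx, ny) for nx, ny in options if patches[ny][nx] == best]))
    # Patch rule: every patch lets some of its scent evaporate.
    for row in patches:
        for i in range(len(row)):
            row[i] *= 0.9
    return moved
```

Calling `step` repeatedly on the values returned by `make_world()` tends to pull turtles toward patches that other turtles have already visited. No turtle is in charge of that clustering, which is the kind of pattern without centralized control that the text describes.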

StarLogo TNG is The Next Generation of StarLogo modeling and simulation software. While this version holds true to the premise of StarLogo as a tool to create and understand simulations of complex systems, it also brings with it several advances - 3D graphics and sound, a blocks-based programming interface, and keyboard input - that make it a great tool for programming educational video games.

Through TNG we hope to:

My publications can be found here, sortable by year and filterable by topic. I have also listed my workshops and invited talks.

Education in computer science has always been important to me.

In Spring 2025, I'll be teaching Startups for the second time: 17-356 Software Engineering for Startups. I also created a course on accessibility with Patrick Carrington: 05-499 Celebrating Accessibility.

In 2024, I created a course on AI, communication skills, and teamwork for autistic community college students. It's called Preparing Autistic Students for the AI Workforce. We're running it again this April! Apply today!

From 2020 to 2023, Paige Rodeghero and I taught a summer video game coding camp for autistic teenagers. We focused our efforts equally on teaching video game design, video game development, and the communication skills necessary for working in teams.

Students spent the first week learning about the game design and development process and the history of video games, and the second week building a game prototype in teams of 2-3 students. At the end of the camp, they presented the games they built to the class and to their families.

In the Spring quarter of 2018, I taught INFO 461: Collaborative Software Development at the Information School at the University of Washington.

In the Winter quarter of 2013, I taught INFO 461: Cooperative Software Development at the Information School of the University of Washington. I taught students how to work in 3-5 person software development teams to design, build, and market a product called Psychics of the Future. Loosely based on web-based advertising, the product had to enable psychics to sign up and upload fortunes which would then be posted to customers' Facebook and Twitter feeds.

Along with Steven Wolfman, Daniel D. Garcia and Rebecca Bates, I led workshops on Kinesthetic Learning Activities, physically engaging classroom exercises that teach computer science concepts.

In grad school, I ran an after-school program for four 8th grade boys to teach them about complex systems and how to program in StarLogo. At first it was slow-going for some of the kids, but by the end, all really understood programming, and half of them understood complex systems!

In the Spring semester of 2001, I co-designed and co-taught CS301: Teaching Techniques for Computer Science, with Dan Garcia. CS301 is a class to teach first-time TAs in the Computer Science Division how to be the greatest TAs they can be. It was truly awesome.

In the Spring semester of 2000, I helped out my friend Laura Allen by being a workshop leader for the TechGyrl's '99 program. I put together a collection of ideas (that I snagged and rearranged from Gary Stager's Logo page) for MicroworldsLogo and LegoDacta.

In Spring 2000, I TAd (GSId) CS164, a class called Introduction to Compilers. It's for junior/senior-level Berkeley undergrads.

In Fall 1997, I TAd CS61a, the introductory CS course at Berkeley. It's taught by Brian Harvey. CS61a is a Berkeley port of 6.001, which I took at MIT in 1993. It uses the Scheme programming language to introduce students to the zen of programming. After this course, learning any other programming language is cake.

Co-taught with Fraser Brown

Co-taught with Somayeh Asadi, Rick Kubina, Taniya Mishra, Matthew Boyer, Jiwoong Jang, Rory McDaniel, and Aidan San

Co-taught with Patrick Carrington

Co-taught with Michael Hilton

Co-taught with Paige Rodeghero

Co-taught with Paige Rodeghero

Co-taught with Paige Rodeghero

Course Instructor

Course Instructor

Co-taught with Daniel D. Garcia

Graduate Student Instructor. Course taught by Alexander Aiken and George Necula

Graduate Student Instructor. Course taught by Brian Harvey

I would be happy to speak with you about my research, CMU, or any questions you have about your own work.

Please come to my office at TCS 441.

TCS Hall is at 4665 Forbes Ave., Pittsburgh, PA 15213.